Ever had one of those moments when your microservices slow to a crawl and you're staring at logs, wondering what's wrong? That's where caching in microservices steps into the spotlight. Think of it as keeping your most-used wrench within arm's reach, so you don't have to dig through the entire toolbox every single time. Instead of going back to the database for every request, a service retrieves frequently used data from a nearby cache, making everything snappier, more efficient, and far less frustrating.

Picture your typical e-commerce platform, loaded with countless product requests. Without caching, every user inquiry might trigger a full database fetch, which turns into a huge bottleneck. Now, toss in caching: instant access to frequently requested data, like bestsellers or ongoing promotions. It’s like having a shelf full of the most popular items right next to you—no walking down endless aisles every time you want something.

But why stop there? Caching strategies in microservices are not just about tossing data into a cache and hoping for the best. They're about choosing the right tool for the job. Are you considering in-memory caching, where each service instance keeps data in its own process for the fastest possible access? Or a distributed cache, shared across multiple nodes so that every instance reads the same cached data? Each choice affects both performance and consistency. When does consistency become critical? Imagine your stock counts don't update immediately after a sale: customers might order items that are already sold out, and chaos follows. Decide up front which data must always be "fresh" and which can safely be a little delayed.
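One way to encode "what needs to be fresh" is a per-data-type time-to-live (TTL) policy. The sketch below assumes three hypothetical data kinds with illustrative TTLs (an hour for product descriptions, five minutes for promotions, and zero for stock counts, meaning they are never cached). The clock is injectable so expiry can be demonstrated without waiting. It models a simple in-memory cache, not any particular distributed caching product.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical freshness policy: how many seconds each kind of data may be served from cache.
# A TTL of 0 means "always read from the source of truth".
FRESHNESS_POLICY: Dict[str, float] = {
    "product_description": 3600.0,  # changes rarely; an hour of staleness is acceptable
    "promotion": 300.0,             # new promotions should show up within minutes
    "stock_count": 0.0,             # must be fresh: never served from cache
}


class TTLCache:
    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[Any, float]] = {}

    def get(self, kind: str, key: str) -> Optional[Any]:
        entry = self._entries.get((kind, key))
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[(kind, key)]  # expired: drop it and report a miss
            return None
        return value

    def put(self, kind: str, key: str, value: Any) -> None:
        ttl = FRESHNESS_POLICY.get(kind, 0.0)  # unknown kinds are treated as "must be fresh"
        if ttl <= 0:
            return  # data that must always be fresh is never cached
        self._entries[(kind, key)] = (value, self._clock() + ttl)


# Usage with a fake clock so expiry is deterministic.
now = [0.0]
cache = TTLCache(clock=lambda: now[0])
cache.put("product_description", "sku-1", "Blue kettle")
cache.put("stock_count", "sku-1", 17)
print(cache.get("product_description", "sku-1"))  # Blue kettle
print(cache.get("stock_count", "sku-1"))          # None
now[0] = 3601.0                                   # an hour and a second later
print(cache.get("product_description", "sku-1"))  # None (expired)
```

Refusing to cache stock counts at all is the bluntest option; a short TTL or event-driven invalidation are common middle grounds when a little delay is tolerable.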

Here's a question: how do you decide what should be cached? Look for data that gets requested over and over, such as product details, user sessions, and recent activity. These are prime candidates. Understanding cache invalidation is just as important. Do you evict entries when specific events occur, such as a product update, or let them expire after a fixed time-to-live (TTL)? Sometimes a quick refresh of the affected cache entries beats waiting for a full database sync, especially during high traffic. And don't forget that cache size matters: too small, and entries are evicted before they can be reused, so you miss hits; too large, and you waste memory on data nobody asks for.
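The size and invalidation trade-offs above can be sketched with a bounded least-recently-used (LRU) cache that also supports explicit, trigger-based invalidation. This is a minimal sketch built on Python's `collections.OrderedDict`; the class name, the capacity of two, and the idea of calling `invalidate` from an order or product-update event handler are illustrative assumptions, not a prescribed design.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Size-bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            self.misses += 1
            return None
        self._data.move_to_end(key)  # mark as most recently used
        self.hits += 1
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self._capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def invalidate(self, key: Hashable) -> None:
        """Trigger-based invalidation, e.g. called when a hypothetical 'product updated' event arrives."""
        self._data.pop(key, None)


cache = LRUCache(capacity=2)
cache.put("sku-1", {"name": "Kettle"})
cache.put("sku-2", {"name": "Toaster"})
cache.get("sku-1")                   # hit; sku-1 is now the most recently used
cache.put("sku-3", {"name": "Mug"})  # over capacity: evicts sku-2
print(cache.get("sku-2"))            # None
cache.invalidate("sku-1")            # e.g. the product was just updated
print(cache.get("sku-1"))            # None
print(cache.hits, cache.misses)      # 1 2
```

LRU is only one eviction policy. The hit and miss counters are included because the hit rate is the simplest signal of whether a cache is sized sensibly.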

Ever seen a microservice architecture where caching single-handedly makes or breaks the user experience? It happens more often than you'd think. Done right, caching can reduce server costs, bring response times for cached reads down to milliseconds, and noticeably improve user satisfaction. Done badly, you can end up serving stale data or, worse, increasing server load, for example when a burst of cache misses and retries all hit the database at once.

So, it’s not just about slapping a cache into your microservices. It’s about crafting a sophisticated, thoughtful approach—balancing speed, data consistency, and resource management. Whether you’re just dipping your toes or fully diving into microservice architecture, understanding caching is like unlocking a secret weapon. It’s not magic—it’s smart engineering that turns slow, clunky systems into streamlined, rapid-fire responses. And isn’t that what makes a great system great?

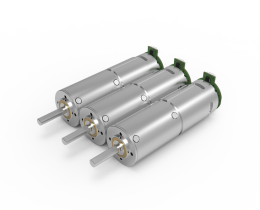

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Leveraging innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients globally, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.