Ever been stuck waiting for a service to load, only to realize the real slowdown is happening somewhere deeper in the tech stack? That’s where caching in microservices really shows its power. Imagine a bustling city where traffic congestion is a nightmare. Caching acts like a smart traffic light system—organizing flow, reducing bottlenecks, and making everything smoother.

Microservices architectures have become the backbone of scalable, flexible applications. But with so many small services talking to each other constantly, latency creeps in. Every request bouncing back and forth? That’s time lost. Fetching data from a distant database? More delay. Caching swoops in like a hero, temporarily storing frequently accessed data close to where it’s needed, which can cut response times dramatically.

Here’s a common scene: a user opens an e-commerce site to view product details. Without caching, that request has to travel to the database, wait for the query to run, and then come back with results. With smart caching, the same data already sits nearby, ready to be served almost instantly. Customers feel it. They get what they want faster, without the frustration of slow pages.
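The request flow above is commonly implemented as a cache-aside (lazy-loading) read: check the cache, and only on a miss go to the database and store the result. A minimal Python sketch, assuming a plain in-memory dict as the cache and a hypothetical `load_product_from_db` function whose `sleep` merely simulates database latency:

```python
import time

# Hypothetical stand-in for the product database.
PRODUCT_DB = {"sku-123": {"name": "Desk Lamp", "price": 24.99}}
db_reads = 0  # counts how often we actually hit the "database"


def load_product_from_db(sku: str) -> dict:
    """Simulate a slow round trip to the database (hypothetical)."""
    global db_reads
    db_reads += 1
    time.sleep(0.05)  # pretend network + query time
    return dict(PRODUCT_DB[sku])


cache: dict[str, dict] = {}


def get_product(sku: str) -> dict:
    """Cache-aside read: serve from the cache, fall back to the database on a miss."""
    if sku in cache:
        return cache[sku]                # hit: served from memory
    product = load_product_from_db(sku)  # miss: go to the database
    cache[sku] = product                 # populate so the next request is a hit
    return product


first = get_product("sku-123")   # miss: goes to the database
second = get_product("sku-123")  # hit: served from the cache
print(db_reads)  # 1
```

Only the first request pays the database cost; every later request for the same product is answered from memory until the entry is removed.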

But how does cache management work under the hood? It’s a balancing act. Cache too aggressively, or keep entries around too long, and you risk serving stale data. Cache too little, and you miss the performance boost. That’s why smart invalidation strategies are so important: expiring entries after a set time (a time-to-live, or TTL), or updating or removing an entry as soon as the underlying data changes.
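Both invalidation strategies fit in a few lines of Python. This is a sketch assuming a single-process cache: `TTLCache` and the `update_price` write path are hypothetical illustrations, not a library API, and the injectable clock exists only so expiry can be shown without actually waiting:

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """Minimal in-process cache with time-based expiry and explicit invalidation.

    `clock` is injectable so expiry can be demonstrated without sleeping.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # time-based expiry: treat as a miss
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._entries[key] = (value, self.clock() + self.ttl)

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)  # event-based invalidation on data change


db = {"sku-123": 24.99}  # hypothetical source of truth


def update_price(cache: TTLCache, sku: str, price: float) -> None:
    """Hypothetical write path: update the database, then drop the cached copy."""
    db[sku] = price
    cache.invalidate(sku)


now = [0.0]  # fake clock value, advanced by hand
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])

cache.set("sku-123", db["sku-123"])
print(cache.get("sku-123"))  # 24.99 (fresh)

update_price(cache, "sku-123", 19.99)
print(cache.get("sku-123"))  # None (invalidated on write; next read reloads)

cache.set("sku-123", db["sku-123"])
now[0] = 61.0
print(cache.get("sku-123"))  # None (TTL of 60 s elapsed)
```

The TTL bounds how stale an entry can ever get, while invalidate-on-write removes staleness immediately for changes the service knows about. Many systems use both, with the TTL acting as a safety net for updates that bypass the write path.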

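Then there’s scale. When a microservice runs as multiple instances, each one needs to see the same cached data, and that’s no small feat. Distributed caches such as Redis or Memcached help here: instead of every instance keeping its own private copy, all instances read from and write to one shared cache, so an update or invalidation made by one instance is visible to the others. That way, even under heavy traffic, users get fast responses without different instances handing out different versions of the same data.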
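To see why sharing matters, here is a simplified Python simulation. `KeyValueCache` is a hypothetical in-process stand-in for a cache server like Redis or Memcached (no real client library is used), and `ServiceInstance` represents one replica doing cache-aside reads and invalidate-on-write:

```python
from typing import Any, Optional


class KeyValueCache:
    """In-process stand-in for a cache server such as Redis or Memcached.

    Hypothetical: a real deployment would reach the cache over the network through
    a client library. Here, "shared" simply means several instances hold a
    reference to the same object.
    """

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    def set(self, key: str, value: Any) -> None:
        self._data[key] = value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


class ServiceInstance:
    """One replica of a microservice that reads through a cache (cache-aside)."""

    def __init__(self, db: dict, cache: KeyValueCache) -> None:
        self.db = db
        self.cache = cache

    def read(self, key: str) -> Any:
        value = self.cache.get(key)
        if value is None:  # miss: load from the database and populate the cache
            value = self.db[key]
            self.cache.set(key, value)
        return value

    def write(self, key: str, value: Any) -> None:
        self.db[key] = value
        self.cache.delete(key)  # invalidates only the cache this instance uses


db = {"stock:sku-123": 10}  # hypothetical database shared by all replicas

# Each replica with its own private cache: B never sees A's invalidation.
a_local = ServiceInstance(db, KeyValueCache())
b_local = ServiceInstance(db, KeyValueCache())
a_local.read("stock:sku-123")
b_local.read("stock:sku-123")
a_local.write("stock:sku-123", 9)
print(b_local.read("stock:sku-123"))  # 10 (stale)

# Both replicas pointing at one shared cache: A's invalidation is visible to B.
shared = KeyValueCache()
a = ServiceInstance(db, shared)
b = ServiceInstance(db, shared)
a.read("stock:sku-123")
b.read("stock:sku-123")
a.write("stock:sku-123", 8)
print(b.read("stock:sku-123"))  # 8
```

A shared cache narrows inconsistency rather than eliminating it: there is still a brief window between the database write and the cache delete, and a concurrent reader can repopulate the cache with an old value, which is one reason TTLs are usually kept as a safety net.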

What about troubleshooting? Sometimes cached data causes confusing bugs: outdated information lingers and gets served to a new request. That’s where monitoring the cache hit ratio and purging the cache periodically come into play. It’s all about tuning, really: adjusting cache size and expiration times to match real-world usage patterns.
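Python’s standard library offers a convenient way to experiment with both ideas: `functools.lru_cache` exposes `cache_info()` (hits, misses, maxsize, currsize) and `cache_clear()`. The sketch below replays a small request log through LRU caches of two sizes to compare hit ratios, then purges one. `lookup` and `TRAFFIC` are hypothetical placeholders for a real backend call and real traffic:

```python
from functools import lru_cache

# Hypothetical request log: "a" is hot, "b" and "c" are colder.
TRAFFIC = ["a", "a", "b", "a", "c", "b", "a"]


def lookup(sku: str) -> str:
    """Hypothetical backend lookup; runs only on a cache miss."""
    return f"product-{sku}"


def replay(maxsize: int):
    """Replay TRAFFIC through a fresh LRU cache of the given size; return the cached function."""
    cached = lru_cache(maxsize=maxsize)(lookup)
    for sku in TRAFFIC:
        cached(sku)
    return cached


def hit_ratio(cached) -> float:
    info = cached.cache_info()  # CacheInfo(hits, misses, maxsize, currsize)
    total = info.hits + info.misses
    return info.hits / total if total else 0.0


for size in (2, 3):
    print(size, round(hit_ratio(replay(size)), 2))
# 2 0.29  (too small: the hot key "a" gets evicted)
# 3 0.57

# Purge: drop every entry (and reset the counters) so the next reads go to the backend.
cached = replay(3)
cached.cache_clear()
print(cached.cache_info())  # CacheInfo(hits=0, misses=0, maxsize=3, currsize=0)
```

In production you would typically read the equivalent counters from the cache server’s own statistics (Redis, for instance, reports keyspace hits and misses) rather than from an in-process cache, but the tuning loop is the same: change the size or TTL, replay or observe traffic, and compare hit ratios.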

Got me thinking: is there a secret sauce here? A magic formula? Not quite. But combining intelligent cache strategies with robust monitoring and automation makes all the difference. It’s like having a living, breathing system that learns what it can keep close and what needs to be refreshed.

At the end of the day, performance isn’t just about faster servers or better code. It’s about smarter data handling: knowing what to keep close and when to fetch fresh information. That’s the real magic of caching in microservices. It can turn a sluggish, clunky backend into a fast, responsive experience, with less waiting and smoother interactions. And trust me, end users will notice the shift.

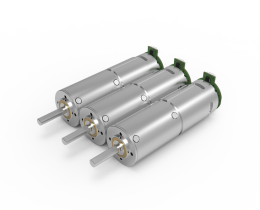

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Building on innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to more than 500 enterprise clients worldwide, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.