Ever been stuck watching your microservices slow down because every request turns into another round trip to the database? That’s the nagging lag your users start to feel. This is where caching, one of the most practical microservice design patterns, throws you a life raft. Think about it: why fetch the same data from the database over and over when you can keep a copy closer and serve it faster? It’s like keeping a stash of your favorite snack in your desk drawer instead of hunting down the vending machine every single time.

Picture this: a user requests information that rarely changes, like product categories or user profiles. Without caching, every one of those requests goes all the way back to the database. Over time, that’s a recipe for sluggishness, especially when traffic ramps up. Adding a caching layer is where the magic begins. Repeat reads are served from the cache, which reduces latency and cuts the load on the database, so everything feels snappier. It also gives your system some breathing room when traffic spikes unexpectedly, making slowdowns and overload-related failures less likely.
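To make that concrete, here’s a minimal sketch of the cache-aside idea in Python: check the cache first, and only go to the database on a miss. The `CacheAside` class, the `load_categories` function, and the sample data are all made up for illustration; a real service would plug in its own database query and most likely a proper cache library.

```python
from typing import Callable, Dict, List


class CacheAside:
    """Cache-aside: look in the cache first, call the loader only on a miss."""

    def __init__(self, loader: Callable[[str], List[str]]) -> None:
        self._loader = loader  # stand-in for a real database query
        self._store: Dict[str, List[str]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> List[str]:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Miss: fetch from the source of truth and remember the result.
        self.misses += 1
        value = self._loader(key)
        self._store[key] = value
        return value


db_calls: List[str] = []  # records every trip to the (pretend) database


def load_categories(key: str) -> List[str]:
    # Hypothetical loader; a real service would query its database here.
    db_calls.append(key)
    return ["books", "games", "music"]


cache = CacheAside(load_categories)
for _ in range(5):
    categories = cache.get("product_categories")

print(len(db_calls), cache.hits, cache.misses)  # 1 4 1
```

Five requests, one database call. That ratio is the whole point, and it only gets better as the same data is requested more often.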

Here’s a question: does caching really work that well with microservices? For read-heavy data, usually yes. The key is choosing the right pattern. For small, frequently accessed data, an in-memory cache inside each service instance often does the trick. Need something every instance can share? A distributed cache spread across multiple nodes gives all instances the same view of the cached data, and it can scale out instead of becoming a bottleneck. And if data freshness is vital? You can set expiration times, or use cache invalidation to refresh only the entries that changed, not everything.
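Expiration and invalidation are easier to picture with a little code. The sketch below is one possible shape for it, not a production cache: `TTLCache`, `FakeClock`, and the `user:1` style keys are invented names, and the fake clock is only there so the example can skip ahead in time without actually waiting.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire after ttl_seconds, or earlier if explicitly invalidated."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # too old: drop it and report a miss
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Call this when the underlying record changes.
        self._store.pop(key, None)


class FakeClock:
    """Manually advanced clock, used here only to demonstrate expiry."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
profiles = TTLCache(ttl_seconds=60, clock=clock)
profiles.set("user:1", {"name": "Ada"})
profiles.set("user:2", {"name": "Lin"})

fresh = profiles.get("user:1")             # still inside the 60 s window
profiles.invalidate("user:2")              # e.g. user 2 just edited their profile
after_invalidate = profiles.get("user:2")  # None: only this entry was dropped

clock.now += 61                            # jump past the TTL
after_expiry = profiles.get("user:1")      # None: the entry aged out
```

Notice that invalidating `user:2` leaves `user:1` untouched. That’s the “refresh only what’s needed” part: one record changed, so one entry goes.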

But wait, isn’t there a risk of staleness? Sure, that’s a concern. The trick is finding a balance: cache too little, and you lose speed; cache for too long, and your info might be outdated. Smart cache management helps on both fronts. Time-based expiration limits how old an entry can get, while an eviction policy like LRU (Least Recently Used) keeps the cache from outgrowing its memory by dropping the entries nobody has touched lately. It’s a dance, really, between speed and accuracy.
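If LRU sounds abstract, here’s a small sketch of how it can work, built on Python’s standard `collections.OrderedDict`. The `LRUCache` class and the tiny capacity of 2 are assumptions chosen to keep the example short; real caches hold far more and usually combine eviction with expiration.

```python
from collections import OrderedDict
from typing import Any, List


class LRUCache:
    """Holds at most `capacity` items; evicts the least recently used one."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._items: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str, default: Any = None) -> Any:
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the oldest entry

    def keys(self) -> List[str]:
        # Ordered from least to most recently used.
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")     # "a" is now the most recently used
lru.put("c", 3)  # over capacity, so "b" is evicted

print(lru.keys())  # ['a', 'c']
```

Reading `a` is what saved it. Popular entries keep getting bumped to the front of the line, and the ones nobody asks for quietly fall off the end.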

Ever wondered what happens when caching is misused? Imagine caching sensitive info insecurely, or overcaching until memory bloats. That’s why planning your cache strategy matters. Don’t just wing it. Think about what needs to be quick, what can safely go stale, and how to keep your cache healthy over time.

Implementing caching in your microservices isn’t just tech talk. It’s a game changer. When done right, users notice the difference: speed, responsiveness, a smoother experience. Internally, your servers breathe easier, and you get more efficiency out of your infrastructure. It’s like giving your microservice architecture a turbo boost without rewriting the entire thing.

So, if you crave faster performance, reduced server strain, and happy users, diving into caching strategies is the move. Fewer bottlenecks, fewer sluggish responses. Instead, picture a system that’s swift, reliable, and ready for traffic spikes. And honestly, isn’t that what all of us want?

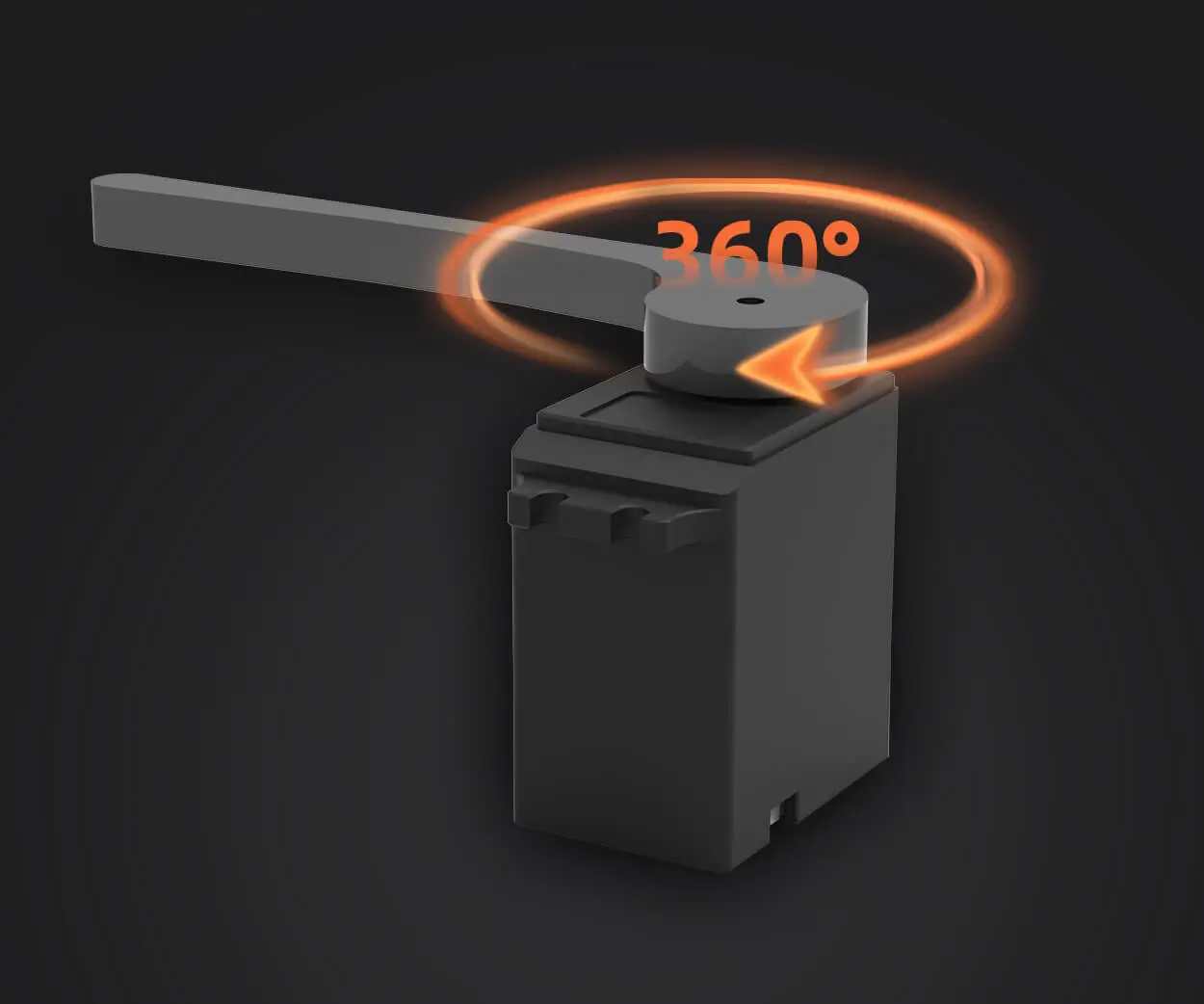

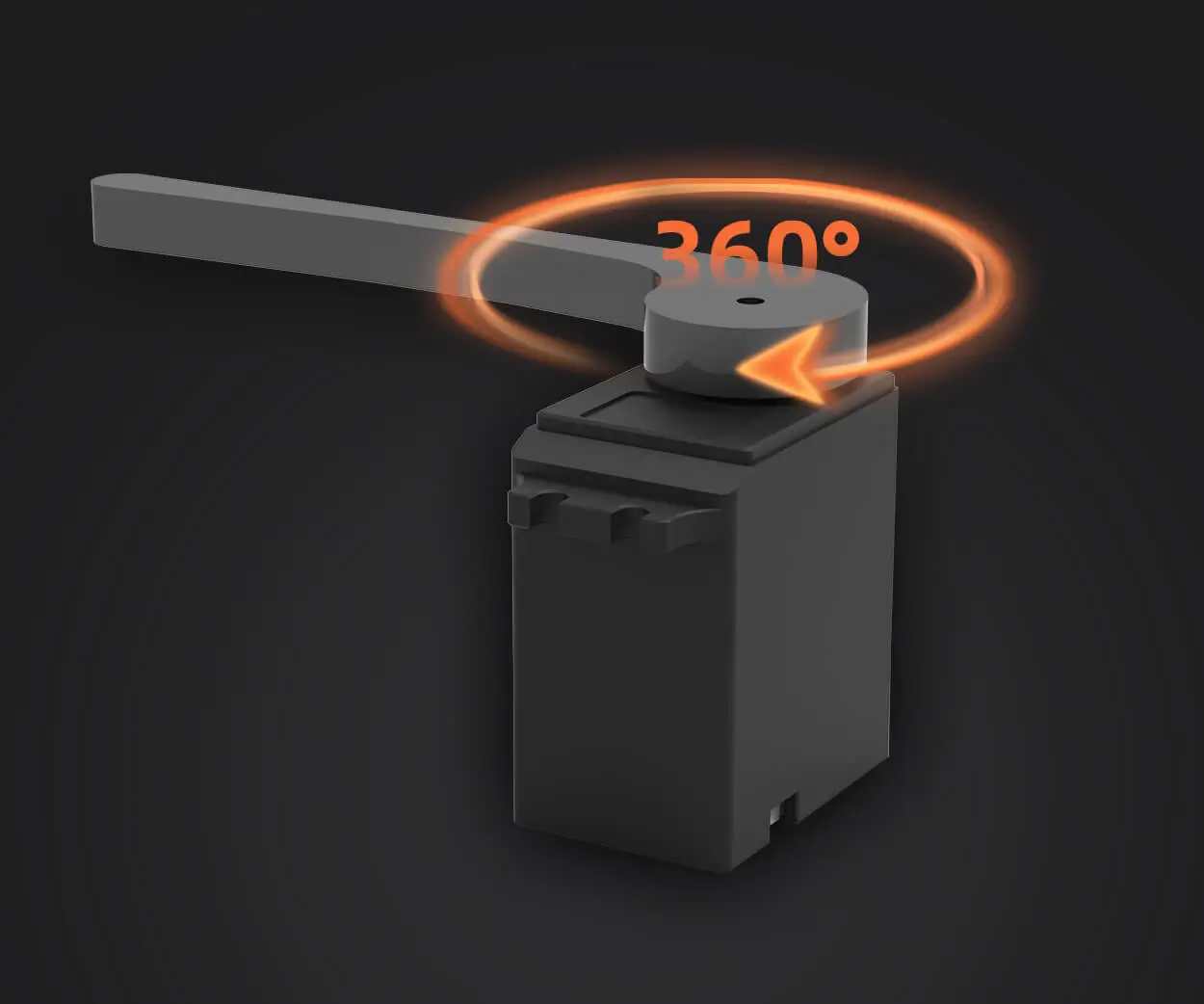
