Imagine running a bustling e-commerce platform and suddenly realizing your system just isn’t fast enough. Customers stare at spinning icons, and your server logs show sluggish response times. What’s missing? Caching in microservices: an unassuming hero that can transform performance, as long as it is designed carefully rather than just thrown in.


Let’s talk about how caching actually works in a microservices setup. Picture each microservice as a small, specialized shop inside a giant market. Instead of running to the big warehouse every time someone wants an item, why not keep a quick-access shelf for the most popular products? That’s essentially what caching does: it keeps frequently requested responses close at hand, so fewer requests have to travel to the database or to downstream services, which reduces the load on the entire system.

Now, some might ask: “Is caching just a quick fix?” Not really. Used wisely, it is a deliberate layer of the architecture. For example, say you have a catalog service that fetches product information. Caching the details of popular products can noticeably cut response times during peak hours. But what if a product’s price changes? You don’t want to serve stale information. That’s where time-to-live (TTL) settings come into play: each cached entry expires after a set period, so data is refreshed periodically instead of being cached forever.
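To make the TTL idea concrete, here is a minimal Python sketch of cache-aside reads for a catalog service. It assumes a single process and a hypothetical `load_from_db` function standing in for the real product database; `TTLCache`, `get_product`, and the injectable `clock` are illustrative names, not any particular library’s API.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-process cache whose entries expire ttl_seconds after being stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, key: str) -> Optional[dict]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            # Expired: drop it so the next read goes back to the source of truth.
            del self._entries[key]
            return None
        return value

    def set(self, key: str, value: dict) -> None:
        self._entries[key] = (self.clock() + self.ttl_seconds, value)

    def invalidate(self, key: str) -> None:
        # Explicit removal, e.g. when the service learns that a price changed.
        self._entries.pop(key, None)


def get_product(product_id: str, cache: TTLCache,
                load_from_db: Callable[[str], dict]) -> dict:
    """Cache-aside read: try the cache first, fall back to the database on a miss."""
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    product = load_from_db(product_id)  # hypothetical database call
    cache.set(product_id, product)
    return product
```

With a 60-second TTL, a changed price is served stale for at most a minute; calling `invalidate` when the price is updated removes the entry right away, so the next read fetches the new value.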

Let’s take another scenario. You’re running a travel booking platform, and users are browsing flight options. The service can cache search results for a few minutes, so repeated searches come back almost instantly. But how do you decide what to cache? Start by prioritizing data with high access frequency, such as trending destinations or top-selling items. You can then tune TTLs and invalidation to match how often each kind of data changes.
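One way to favor frequently accessed data is to admit a search result into the cache only after the same query has been seen a few times. The sketch below assumes a five-minute TTL, a threshold of two requests, and a hypothetical `run_search` function for the expensive flight lookup; `FrequencyGatedCache` is an illustrative name, not an established library class.

```python
import time
from collections import Counter
from typing import Callable, Dict, List, Tuple

SearchResult = List[str]


class FrequencyGatedCache:
    """Caches a search result only after its query has been requested min_hits times."""

    def __init__(self, ttl_seconds: float = 300.0, min_hits: int = 2,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.min_hits = min_hits
        self.clock = clock
        self.hits: "Counter[str]" = Counter()  # request count per query
        self._entries: Dict[str, Tuple[float, SearchResult]] = {}

    def search(self, query: str,
               run_search: Callable[[str], SearchResult]) -> SearchResult:
        self.hits[query] += 1
        entry = self._entries.get(query)
        if entry is not None and self.clock() < entry[0]:
            return entry[1]  # fresh cached result
        result = run_search(query)  # hypothetical expensive flight search
        # Only popular queries earn a cache slot; one-off searches are not stored.
        if self.hits[query] >= self.min_hits:
            self._entries[query] = (self.clock() + self.ttl_seconds, result)
        return result
```

The gate keeps rarely repeated searches from crowding out trending routes. In this sketch the hit counter and expired entries are never pruned, so a long-running service would also need some eviction.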

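Some might think caching only adds complexity, but layering strategies make all the difference. In-memory stores like Redis or Memcached shine here: they’re fast and versatile. Underneath, a pattern like cache-aside (the service checks the cache first and loads from the database only on a miss), applied per service and paired with a few safeguards, can prevent common pitfalls such as cache stampedes, where many requests try to rebuild the same missing entry at once, or stale data.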
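A simple safeguard against stampedes inside a single service instance is a per-key lock with a double check, so only one thread rebuilds a missing entry while the others wait and then reuse it. This is a minimal in-process sketch; `StampedeSafeCache` and `get_or_load` are hypothetical names, and coordinating several instances would need something beyond Python’s `threading` module, such as a lock held in the shared cache.

```python
import threading
from typing import Any, Callable, Dict, Hashable

_MISSING = object()  # sentinel so cached None values are distinguishable from misses


class StampedeSafeCache:
    """Cache-aside helper that lets only one thread per key rebuild a missing entry."""

    def __init__(self) -> None:
        self._values: Dict[Hashable, Any] = {}
        self._locks: Dict[Hashable, threading.Lock] = {}
        self._locks_guard = threading.Lock()  # serializes creation of per-key locks

    def _lock_for(self, key: Hashable) -> threading.Lock:
        with self._locks_guard:
            return self._locks.setdefault(key, threading.Lock())

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        value = self._values.get(key, _MISSING)
        if value is not _MISSING:
            return value  # fast path: cache hit, no locking
        with self._lock_for(key):
            # Double-check: another thread may have filled the entry while we waited.
            value = self._values.get(key, _MISSING)
            if value is _MISSING:
                value = loader(key)  # only one thread per key reaches this call
                self._values[key] = value
            return value
```

Concurrent readers of the same hot key block briefly on its lock instead of all hitting the database; readers of other keys are unaffected because each key has its own lock.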

Are there limitations? Sure, but weighed against the performance gains, caching is usually worth it. Done haphazardly, it risks inconsistent data or memory bloat. The tricky part is balancing freshness with speed: sometimes a slightly outdated response isn’t a deal-breaker, but in finance or healthcare it could be.

What about real-world lessons? A common approach in large, high-traffic systems is to keep the hottest data in local, in-process caches and to combine that with a distributed cache that every instance can reach. Speed and consistency have to be balanced against each other, and understanding how your data flows should guide your caching strategy.
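The local-plus-distributed combination can be sketched as a two-tier lookup: a small least-recently-used cache inside each service instance, backed by a shared cache, backed by the source of truth. Here `InMemoryRemote` is a hypothetical dict-based stand-in for a shared store such as Redis, not a real client, and `load_from_source` is an assumed loader function.

```python
from collections import OrderedDict
from typing import Callable, Dict, Optional, Protocol


class RemoteCache(Protocol):
    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class InMemoryRemote:
    """Hypothetical stand-in for a shared distributed cache; not a real client."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self.data.get(key)

    def set(self, key: str, value: str) -> None:
        self.data[key] = value


class TwoTierCache:
    """Per-instance LRU cache (tier 1) in front of a shared cache (tier 2)."""

    def __init__(self, remote: RemoteCache, local_capacity: int = 1000):
        self.remote = remote
        self.local_capacity = local_capacity
        self.local: "OrderedDict[str, str]" = OrderedDict()

    def _put_local(self, key: str, value: str) -> None:
        self.local[key] = value
        self.local.move_to_end(key)
        if len(self.local) > self.local_capacity:
            self.local.popitem(last=False)  # evict the least recently used entry

    def get(self, key: str, load_from_source: Callable[[str], str]) -> str:
        if key in self.local:
            self.local.move_to_end(key)  # mark as recently used
            return self.local[key]
        value = self.remote.get(key)
        if value is None:
            value = load_from_source(key)  # both tiers missed: go to the source
            self.remote.set(key, value)
        self._put_local(key, value)
        return value
```

Instances share work through the remote tier, so a value loaded by one instance is a remote hit for the others. The local tier in this sketch never expires entries, so in practice it would get a short TTL to keep copies on different instances from drifting apart.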

So yes, caching in microservices isn’t just a checkbox; it’s a nuanced craft. Executed thoughtfully, it turns sluggish responses into swift ones. It’s about blending technical know-how with careful planning, making your platform faster, more reliable, and ready to handle growth without breaking a sweat.

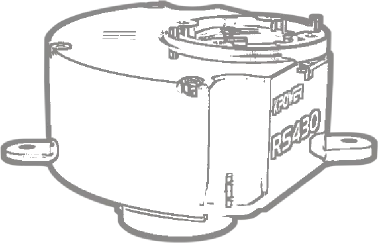

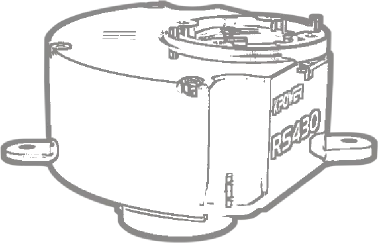

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Building on innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered drive system solutions to over 500 enterprise clients worldwide, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.
